[1.1.222] Preprocessor: inpaint_only+lama #1597

Replies: 28 comments 40 replies

-


Looks good!

-

Seems like a nice improvement! As with the last update, I still seem to get nicer results when using this in combination with the reference preprocessor. When outpainting using only

All examples directly below use the same parameters as above, with the exception of the input images, and these settings for the model:

Original image (the original highres version from https://danbooru.donmai.us/posts/6196599 was used; the lower-res version below is just a preview).

inpaint_only+lama AND reference_adain+attn @ 0.5 strength, 0.5 style fidelity (much better results, imo)

I also tried using the same settings for the example in the OP but with the addition of the reference preprocessor, just to see if it helps at all. I verified I could reproduce the original results beforehand, so the only difference in the example below is the addition of the reference preprocessor. In this case, it actually makes the results worse. I'm guessing this has something to do with the reference image becoming expanded and cropped to a small, wide area in the center (due to the

If I were to throw in my own two cents, I do think that ultimately no currently available public model can fully handle illustrated artwork very well (not even Waifu Diffusion), so I don't think it's a fault of the system but of the base models/data. I would love to be proven wrong, though.

Edit: To make my statement above more concrete, these are non-cherry-picked outputs directly from Clipdrop's Uncrop. These are objectively the best results out of all my examples. I don't think any publicly available model weights can quite compete yet (Uncrop uses SDXL, as the blog post mentions, which has many advantages, including being trained with RLHF, and is not yet available). I would test with Adobe Firefly as well, but I believe outpainting can only be done in the app, which I do not own. SDXL does have the promise of eventually being released, which is a plus.

As an aside, giving an input prompt should always improve results (it does for me, at least), but since the goal here is promptless in/outpainting, I think these comparisons are still important.

-

I found that enabling inpaint, reference, and IP2P at the same time can give a very good inpaint result.

Original picture by Aitasai. Parameters I used: 1 girl wear a bikini.

Only inpaint_only+lama (ControlNet is more important): as you can see, the pose of the girl changes.

Adding an openpose ControlNet to control the pose: the pose is even stranger.

inpaint_only+lama (ControlNet is more important) + IP2P (ControlNet is more important): the pose of the girl is much more similar to the original picture, but part of the sleeves seems to have been preserved. The result is bad.

inpaint_only+lama (ControlNet is more important) + reference_adain+attn (Balanced, Style Fidelity: 0.5) + IP2P (ControlNet is more important)

-

Great stuff. Do you guys also plan to add the ZITS model, which is superior to the default LaMa? It does wireframes and gives better-looking results. It might not be that much of an improvement when SD is generating the image anyway, but it's definitely my default model in Lama Cleaner.

-

Is ControlNet inpaint currently capable of inpainting transparent pixels (as when fixing a tilted photo) or of accepting a custom inpaint mask? It seems to just stretch the border pixels unless I draw a mask myself.

-

I'm consistently getting color and contrast differences when outpainting. Any ideas?

-

How do you get ControlNet 1.1.222? I just updated mine, but it's only on v1.1.208. Edit: nvm, got it to work; my settings were just off.

-

Yeah, choosing between LaMa models would be nice. I like ZITS too.

-

So is txt2img inpainting possible without getting a blurry and/or seamed mask area? What settings do you actually need to avoid that?

-

HELP. When I select inpaint+lama, the model controlV11p inpaint... is not showing, so I just left it at None, since the tutorial I watched said it would be downloaded the first time I use inpaint+lama. But that doesn't seem to be the case. How did you guys get that model? Thanks.

-

Hello Illyas, I hope people are supporting you through Patreon or something, or just donations. Thanks again.

-

I expanded the image using the author's settings and tried N times; the result is that the expanded part is not completely transparent. Please give me some advice, thanks.

-

It looks like inpaint works well in txt2img when masking in the small ControlNet window, but img2img or inpaint mode still gives results with lots of artifacts. How can I make img2img work like txt2img?

-

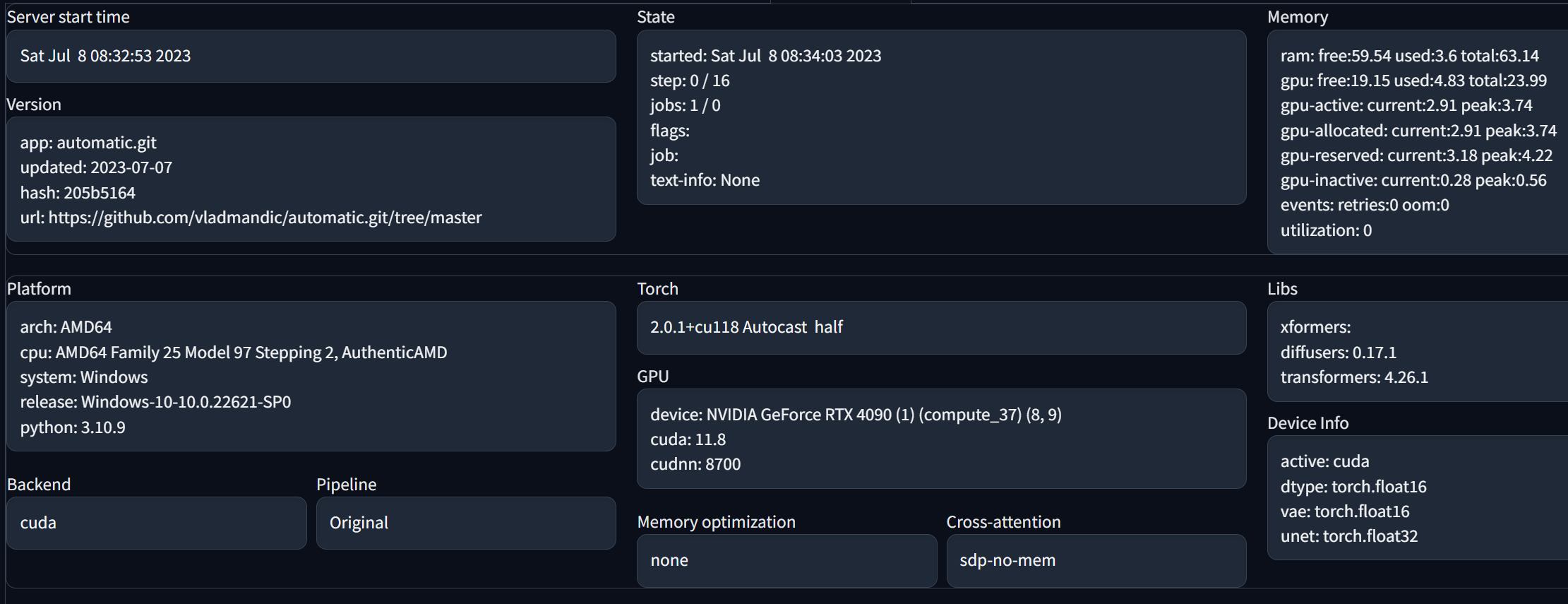

Inpaint + LaMa is currently broken for me in Vladmandic's A1111 branch. Problem one: vladmandic/automatic#1603 - resize mode is greyed out. Does anyone have any ideas about those?

-

Feature request: txt2img inpainting should also have a mask upload. It would be easier to inpaint with an uploaded mask than to draw the mask with a brush every time.

-

I keep getting visible seams when using this for outpainting. Does anyone have any tips on avoiding this, please?

-

I have tried many times with the picture from your email, generated a new picture of a cat, and also generated other types of pictures; all of them have this problem: there is a clear boundary between the extended part and the original picture, and it is easier to see with brighter, simpler patterns.

I had only tested the function, not actually used it. The background of the picture I used for testing was dark, so I didn't notice this problem, and I don't know how to solve it.

-

Is it possible to reproduce the

-

Can this be used to remove an object from a photo?

-

Hello @lllyasviel, this method is still lacking in terms of control. I want a more efficient method; can you check my request here: lllyasviel/ControlNet#508

-

Does anyone know how to implement this in a diffusers pipeline? (Mainly the LaMa + inpaint preprocessor.)

-

Can you guys add a third option to use just the LaMa result? I can see that the masked result from inpaint+lama is clean and removes objects without issues, while with the two existing inpaint methods (inpaint_only and inpaint_only+lama) you have to try a dozen times before the object is removed.

-

When using the inpaint_only+lama pipeline, random objects still appear in the masked area! How can we solve this problem?

-

Hi all, I want to share our recent model for image inpainting, PowerPaint. Importantly, it can effectively remove objects without random objects appearing in their place. YouTube: https://youtube.com/watch?v=7QsiY1JQUfg

-

Sadly, there's no LaMa option for AMD users, at least not one I've come across yet... am I missing something?

-

We have recently developed a new version of PowerPaint, drawing inspiration from BrushNet. The online demo has been updated accordingly. In the coming days, we intend to release the model weights as open source. PowerPaint can carry out diverse inpainting tasks, such as object insertion, object removal, shape-guided object insertion, and outpainting. Notably, we have retained the cross-attention layer that BrushNet removed, which is essential for task prompt input.

-

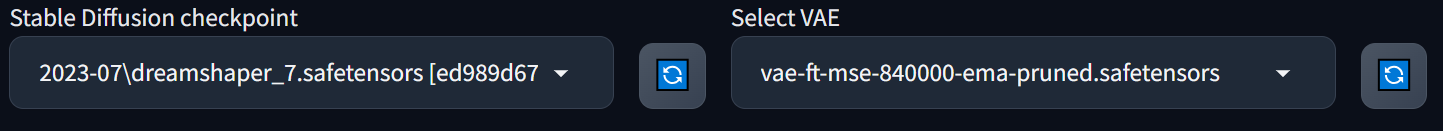

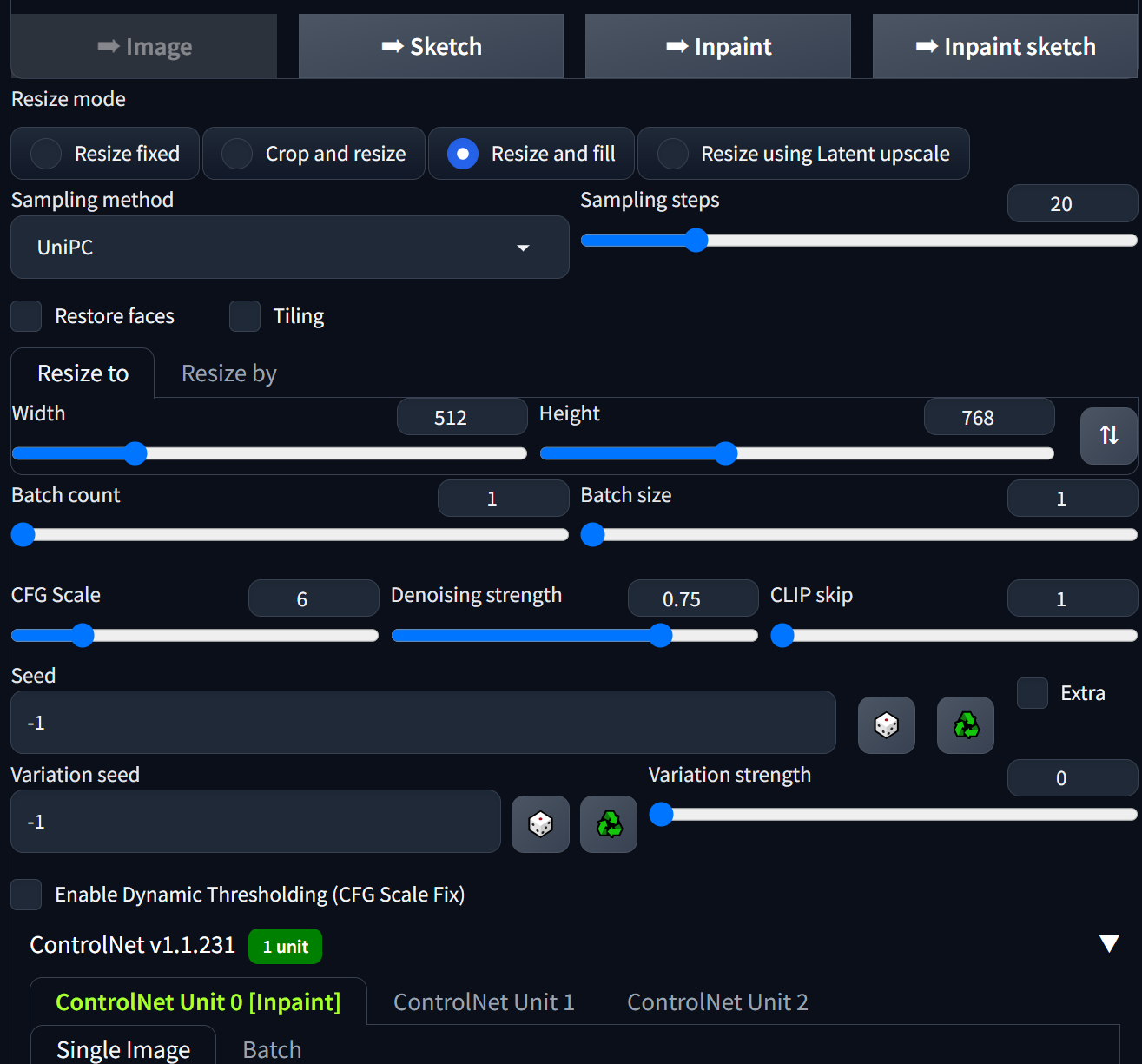

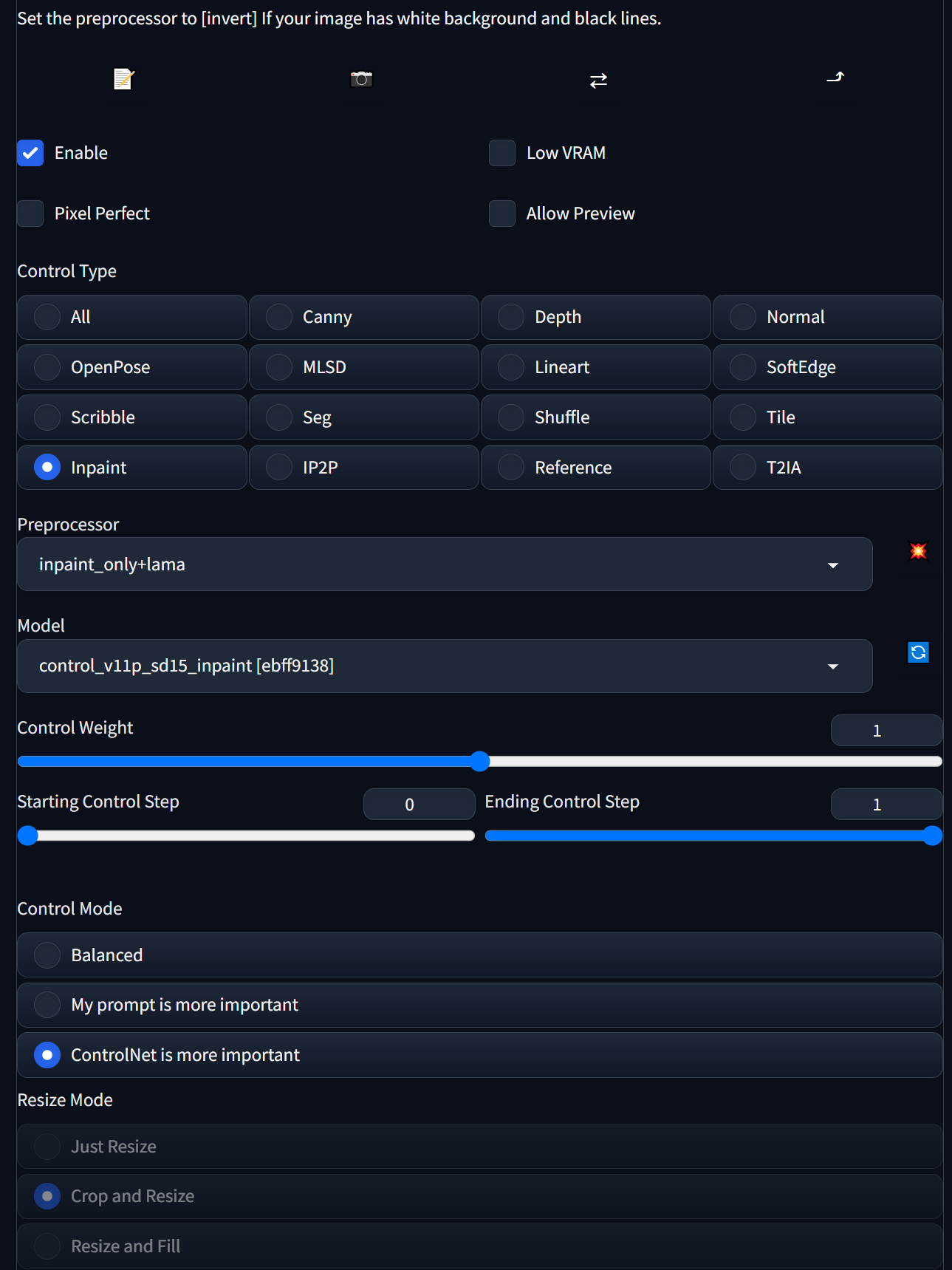

1.1.222 added a new inpaint preprocessor: inpaint_only+lama

LaMa: Resolution-robust Large Mask Inpainting with Fourier Convolutions (Apache-2.0 license)

Roman Suvorov, Elizaveta Logacheva, Anton Mashikhin, Anastasia Remizova, Arsenii Ashukha, Aleksei Silvestrov, Naejin Kong, Harshith Goka, Kiwoong Park, Victor Lempitsky

(Samsung Research and EPFL)

The basic idea of "inpaint_only+lama" is inspired by Automatic1111's upscaler design: use some other neural network (like a super-resolution GAN) to process the image first, and then use Stable Diffusion to refine it and generate the final image.

We bring a similar idea to inpainting. When you use the new inpaint_only+lama preprocessor, your image is first processed with the LaMa model, and then the LaMa result is encoded by your VAE and blended into the initial noise of Stable Diffusion to guide generation.
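To make "blended into the initial noise" concrete, here is a minimal NumPy sketch of one way a VAE latent can be mixed into a starting latent: the standard DDPM forward process, x_t = sqrt(ᾱ_t)·x_0 + sqrt(1 − ᾱ_t)·ε, applied to the encoded LaMa image. This is an assumption for illustration, not the extension's actual code; the helper names `sd_alphas_cumprod` and `init_latent_from_lama`, and the starting step `t`, are hypothetical. Random arrays stand in for the real VAE latent.

```python
import numpy as np


def sd_alphas_cumprod(num_steps: int = 1000) -> np.ndarray:
    """Cumulative alpha products for Stable Diffusion 1.x's "scaled linear"
    beta schedule (beta_start=0.00085, beta_end=0.012)."""
    betas = np.linspace(0.00085 ** 0.5, 0.012 ** 0.5, num_steps) ** 2
    return np.cumprod(1.0 - betas)


def init_latent_from_lama(lama_latent: np.ndarray, noise: np.ndarray, alpha_bar: float) -> np.ndarray:
    """Hypothetical helper: noise the VAE-encoded LaMa image with the DDPM
    forward process q(x_t | x_0), so sampling starts from a noisy version of
    the LaMa fill instead of from pure Gaussian noise."""
    return np.sqrt(alpha_bar) * lama_latent + np.sqrt(1.0 - alpha_bar) * noise


# Random stand-ins: a real pipeline would use the VAE latent of the LaMa output.
rng = np.random.default_rng(0)
lama_latent = rng.standard_normal((4, 64, 64))  # SD 1.x latent shape for a 512x512 image
noise = rng.standard_normal(lama_latent.shape)
alphas_cumprod = sd_alphas_cumprod()
t = 800  # hypothetical starting step; a larger t keeps less of the LaMa latent
x_start = init_latent_from_lama(lama_latent, noise, alphas_cumprod[t])
print(x_start.shape)
```

Because ᾱ_t shrinks toward zero as t approaches the last step, the LaMa contribution is strongest when sampling starts from a partially noised step, which is one plausible reason such a prior yields "cleaner", less random fills.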

The results from inpaint_only+lama usually look similar to inpaint_only but a bit "cleaner": less complicated, more consistent, and with fewer random objects. This makes inpaint_only+lama suitable for image outpainting or object removal.

Comparison

Input image (this image is from Stability's post about Clipdrop)

Configuration:

(The Resize Mode "Resize and Fill" enables outpaint)
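The sketch below shows the geometry "Resize and Fill" implies for outpainting. It assumes, rather than quoting the ControlNet source, that the input is scaled to fit inside the target resolution without cropping, centered, and that the uncovered border becomes the area to generate. `Placement`, `resize_and_fill_placement`, and `outpaint_mask` are hypothetical names, and the mask is a plain nested list so the example stays dependency-free.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Placement:
    new_w: int  # source width after aspect-preserving resize
    new_h: int  # source height after aspect-preserving resize
    left: int   # x offset of the resized source inside the target canvas
    top: int    # y offset of the resized source inside the target canvas


def resize_and_fill_placement(src_w: int, src_h: int, dst_w: int, dst_h: int) -> Placement:
    """Fit the source inside the target canvas without cropping, centered."""
    scale = min(dst_w / src_w, dst_h / src_h)
    new_w, new_h = round(src_w * scale), round(src_h * scale)
    return Placement(new_w, new_h, (dst_w - new_w) // 2, (dst_h - new_h) // 2)


def outpaint_mask(src_w: int, src_h: int, dst_w: int, dst_h: int) -> List[List[int]]:
    """Return a dst_h x dst_w mask: 1 = empty border to generate, 0 = covered by the source."""
    p = resize_and_fill_placement(src_w, src_h, dst_w, dst_h)
    mask = [[1] * dst_w for _ in range(dst_h)]
    for y in range(p.top, p.top + p.new_h):
        for x in range(p.left, p.left + p.new_w):
            mask[y][x] = 0
    return mask


# A square 512x512 image on a 1024x512 canvas leaves 256 px of new content on each side.
print(resize_and_fill_placement(512, 512, 1024, 512))
```

Centering the source splits the generated area evenly between both sides, which matches the symmetric wide outpaint in the comparison below; an off-center placement would only change `left` and `top`.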

Results:

inpaint_only

(9 non-cherry-picked raw outputs)

inpaint_only+lama

(9 non-cherry-picked raw outputs)

You can see that inpaint_only+lama is a bit "cleaner" than inpaint_only (and also a little bit more robust).

You need at least 1.1.222 to use this feature.
